In a remarkable fusion of entomology and advanced optics, researchers have unveiled a groundbreaking panoramic imaging system modeled after the compound eyes of insects. This bio-inspired laser radar (lidar) technology promises to revolutionize fields ranging from autonomous navigation to environmental monitoring by mimicking nature's most sophisticated visual processors.

The team behind this innovation spent seven years decoding the optical secrets of dragonflies and mantis shrimp, creatures whose compound eyes provide nearly 360-degree vision with exceptional motion-detection capabilities. By reverse-engineering these biological marvels, engineers have created what they describe as the first functional lidar array to replicate the multi-faceted imaging approach of arthropods.

Nature's Blueprint for Next-Gen Sensors

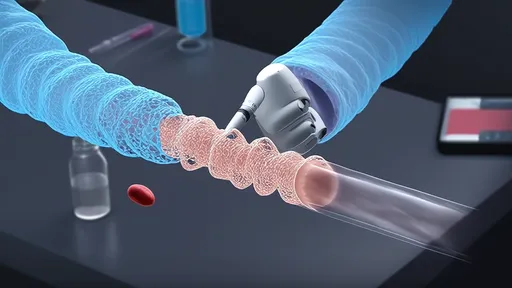

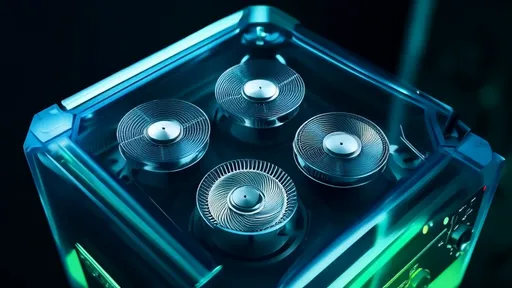

Traditional lidar systems rely on rotating mirrors or bulky emitter arrays to achieve wide-angle detection, resulting in mechanical complexity and blind spots. The new compound-eye design eliminates these limitations through an elegant arrangement of 180 microscopic laser emitters and receivers, each occupying a distinct optical channel similar to the ommatidia in insect eyes.

Professor Elena Voskresenskaya, lead researcher at the Bio-Optics Laboratory in Zurich, explains: "What makes insect vision so extraordinary isn't just the field of view, but how their neural architecture processes multiple data streams simultaneously. Our system emulates this parallel processing at the hardware level, allowing real-time depth mapping across the entire hemisphere."

The hemispherical sensor array measures just 3.8 cm in diameter yet achieves an unprecedented 270-degree effective field of view with zero moving parts. Early tests demonstrate superior performance in dynamic environments where conventional lidar struggles, particularly in detecting fast-moving objects at close range, a scenario in which insects excel.
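To put the 180-channel, 270-degree figures in perspective, the short sketch below estimates how far apart neighbouring channels would be if they were spread evenly over the field of view. It assumes the field of view is a spherical cap with a 270-degree apex angle and that each channel covers a roughly square patch of it; both are illustrative assumptions, not published specifications, and `channel_pitch_deg` is a hypothetical helper.

```python
import math


def channel_pitch_deg(fov_deg: float, n_channels: int) -> float:
    """Approximate angular pitch between neighbouring channels.

    Illustrative assumptions: the field of view is a spherical cap whose
    full apex angle is fov_deg, and the channels tile it uniformly with
    roughly square footprints.
    """
    half_angle = math.radians(fov_deg / 2.0)
    # Solid angle of a spherical cap with half-angle theta: 2*pi*(1 - cos(theta))
    cap_sr = 2.0 * math.pi * (1.0 - math.cos(half_angle))
    per_channel_sr = cap_sr / n_channels
    # A small square patch of side s radians covers roughly s**2 steradians.
    return math.degrees(math.sqrt(per_channel_sr))


if __name__ == "__main__":
    pitch = channel_pitch_deg(270.0, 180)
    print(f"approx. {pitch:.1f} degrees between channels")  # approx. 14.0 degrees
```

Under these assumptions the 270-degree cap spans about 10.7 steradians, so each of the 180 channels would be responsible for a patch roughly 14 degrees across. A non-uniform layout or additional sampling within each channel would change this figure.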

Engineering Meets Evolutionary Biology

Creating artificial ommatidia required solving fundamental challenges in micro-optics. Each emitter-receiver pair functions as an independent lidar channel, firing its own sequenced laser pulses while neighboring channels stay active. This mimics how individual facets in compound eyes work autonomously while contributing to a unified visual field.
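The article does not say how each channel measures distance. Assuming direct pulsed time-of-flight ranging, the common approach in lidar, a channel converts the round-trip time of its pulse into range, and a staggered schedule lets many channels share a frame. The grouping scheme and the function names below are hypothetical and serve only to illustrate the idea of "sequenced" firing.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0  # exact, by SI definition


def tof_range_m(round_trip_s: float) -> float:
    """Direct time-of-flight ranging: the pulse travels out and back,
    so the one-way distance is half of c * t."""
    return SPEED_OF_LIGHT_M_S * round_trip_s / 2.0


def staggered_fire_times(n_channels: int, frame_s: float, n_groups: int) -> list[float]:
    """Hypothetical schedule: channel i joins group i % n_groups and each
    group fires in its own time slot, so consecutively numbered channels
    never fire together, yet every channel still fires once per frame."""
    slot_s = frame_s / n_groups
    return [(i % n_groups) * slot_s for i in range(n_channels)]


if __name__ == "__main__":
    print(f"{tof_range_m(100e-9):.2f} m")  # 100 ns round trip -> 14.99 m
    print(staggered_fire_times(6, 1e-3, 3))  # three interleaved firing slots
```

Interleaving is one simple way to keep adjacent emitters from firing at the same instant; a real hemispherical array would define "adjacent" by physical position rather than by channel index.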

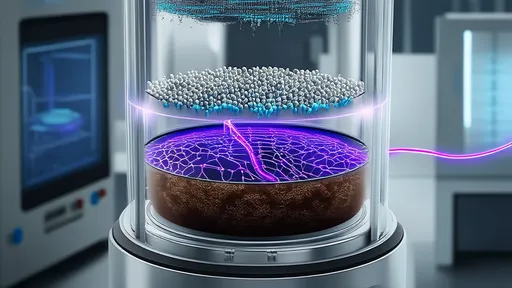

The breakthrough came through novel gradient-index (GRIN) optics that curve laser beams without conventional lenses. "We essentially grew optical structures that bend light like the crystalline cones in insect eyes," says materials scientist Dr. Rajiv Menon. "This allows our emitters to maintain focus across extreme angles while keeping the package incredibly compact."

Signal processing presents another radical departure from conventional lidar. Instead of creating a single point cloud, the system generates multiple localized depth maps that are computationally stitched together, much as an insect's brain synthesizes input from thousands of ommatidia. This distributed processing architecture enables faster reaction times while consuming 40% less power than equivalent conventional systems.
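The team's stitching algorithm is not described, so the sketch below swaps in a generic technique, voxel-grid averaging, to show what "stitching" local depth maps can mean. Each channel's samples are assumed to be `(azimuth, elevation, range)` triples already calibrated into a shared sensor frame; where neighbouring channels overlap and see the same surface, points that fall into the same voxel are averaged rather than duplicated. The helper names and the 5 cm voxel size are illustrative assumptions.

```python
import math
from collections import defaultdict


def to_xyz(azimuth_rad: float, elevation_rad: float, range_m: float) -> tuple[float, float, float]:
    """Convert one spherical sample in the sensor frame to Cartesian x, y, z."""
    cos_el = math.cos(elevation_rad)
    return (
        range_m * cos_el * math.cos(azimuth_rad),
        range_m * cos_el * math.sin(azimuth_rad),
        range_m * math.sin(elevation_rad),
    )


def stitch(local_maps, voxel_m: float = 0.05):
    """Merge per-channel depth maps into a single point cloud.

    local_maps: one list per channel of (azimuth, elevation, range) samples,
    assumed already calibrated into a shared sensor frame. Points landing in
    the same voxel are averaged, so overlapping channels do not double-count.
    """
    sums = defaultdict(lambda: [0.0, 0.0, 0.0, 0])  # voxel -> [sx, sy, sz, count]
    for samples in local_maps:
        for az, el, r in samples:
            x, y, z = to_xyz(az, el, r)
            key = (math.floor(x / voxel_m), math.floor(y / voxel_m), math.floor(z / voxel_m))
            acc = sums[key]
            acc[0] += x
            acc[1] += y
            acc[2] += z
            acc[3] += 1
    return [(sx / n, sy / n, sz / n) for sx, sy, sz, n in sums.values()]


if __name__ == "__main__":
    channel_a = [(0.0, 0.0, 2.02)]
    channel_b = [(0.0, 0.0, 2.03), (math.pi / 2, 0.0, 1.0)]
    cloud = stitch([channel_a, channel_b])
    print(len(cloud))  # 2: the hit seen by both channels is merged
```

Because each channel's samples can be converted independently before the final merge, this kind of pipeline parallelizes naturally across channels, which is in the spirit of the distributed architecture the article describes.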

Applications Taking Flight

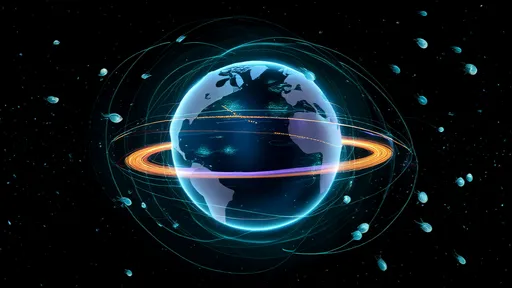

Field tests with drone-mounted prototypes have demonstrated extraordinary capabilities. In dense forest environments where GPS signals falter, the insect-inspired lidar maintained perfect spatial orientation by tracking multiple canopy penetration points simultaneously. The system's wide baseline (effectively the distance between "eyes") provides instantaneous stereoscopic depth perception surpassing human binocular vision.
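The role of the baseline can be illustrated with the textbook pinhole-stereo triangulation relation. This is offered as a sketch under the assumption that two viewpoints on the array behave like a rectified stereo pair; the article does not state which depth model the team uses. Here $Z$ is depth, $f$ the focal length, $B$ the baseline, and $d$ the disparity between the two views:

$$
Z = \frac{f\,B}{d},
\qquad
\left|\frac{\partial Z}{\partial d}\right| = \frac{f\,B}{d^{2}} = \frac{Z^{2}}{f\,B}
\quad\Rightarrow\quad
\delta Z \approx \frac{Z^{2}}{f\,B}\,\delta d .
$$

For a fixed disparity error $\delta d$, depth uncertainty grows with the square of distance and shrinks in proportion to $f B$, which is why a larger effective baseline sharpens depth perception.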

Automotive engineers are particularly excited about the technology's potential for vehicle perception. The panoramic coverage could eliminate blind spots entirely, while the system's ability to track dozens of moving objects matches the collision-avoidance prowess of flying insects. Early adopters anticipate this could become the first lidar solution to meet the stringent safety requirements for fully autonomous urban driving.

Beyond transportation, the technology shows promise for industrial robotics, where machines often struggle with cluttered environments. Warehouse robots equipped with prototype sensors demonstrated a 92% improvement in navigating around unpredictable human workers compared to conventional vision systems.

The Future of Bio-Inspired Sensing

As research continues, the team is exploring how different insect visual specializations could lead to tailored sensor variants. Fireflies' ability to distinguish faint light signals might inform low-light lidar designs, while honeybee polarization vision could inspire navigation systems that read environmental cues invisible to conventional sensors.

Commercialization efforts are already underway, with pilot production expected to begin next year. While current costs remain high due to specialized manufacturing, the designers anticipate rapid cost reduction as fabrication techniques mature, potentially making insect-inspired lidar ubiquitous across consumer electronics, smart infrastructure, and even household robots.

This convergence of biology and engineering reminds us that after 400 million years of evolution, nature's solutions often surpass human ingenuity. By humbly observing the small creatures around us, we may have found the key to solving some of our biggest technological challenges in spatial perception and environmental interaction.

By /Aug 5, 2025

By /Aug 5, 2025

By /Aug 5, 2025

By /Aug 5, 2025

By /Aug 5, 2025

By /Aug 5, 2025

By /Aug 5, 2025

By /Aug 5, 2025

By /Aug 5, 2025

By /Aug 5, 2025

By /Aug 5, 2025

By /Aug 5, 2025

By /Aug 5, 2025

By /Aug 5, 2025

By /Aug 5, 2025

By /Aug 5, 2025

By /Aug 5, 2025

By /Aug 5, 2025

By /Aug 5, 2025

By /Aug 5, 2025